Point Estimation Of The Population Mean

listenit

Mar 24, 2025


Point Estimation of the Population Mean: A Comprehensive Guide

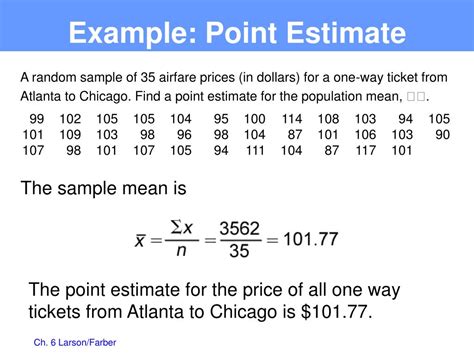

Point estimation is a core concept in inferential statistics and underlies many statistical analyses. It uses sample data to compute a single value, called a point estimate, that serves as the best guess for an unknown population parameter. This article covers point estimation of the population mean: the main estimation methods, the properties that distinguish good estimators, and practical complications such as non-normal data, small samples, and correlated observations. It is intended both for beginners and for readers looking for a refresher.

Understanding the Population Mean and Sample Mean

Before delving into point estimation, let's clarify the core concepts involved. The population mean (μ) represents the average value of a variable across the entire population. However, accessing the entire population is often impractical, expensive, or even impossible. This is where sampling comes in. A sample is a subset of the population, and the sample mean (x̄) is the average of the values in that sample. The sample mean serves as our estimator for the population mean.
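Written out, the two means differ only in which values are averaged. In the notation below (introduced here for illustration, not taken from the source), y_1, ..., y_N are the N values in the population and x_1, ..., x_n are the n values in the sample:

```latex
\mu = \frac{1}{N}\sum_{j=1}^{N} y_j
\qquad\qquad
\bar{x} = \frac{1}{n}\sum_{i=1}^{n} x_i
```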

The Importance of Random Sampling

The reliability of a point estimate hinges on how the sample was collected. In simple random sampling, every member of the population has an equal chance of being selected, which prevents systematic bias and allows findings from the sample to be generalized to the population. Non-random sampling, such as surveying only the people who are easiest to reach, can produce severely biased estimates, and collecting more data the same way does not remove that bias.
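A small simulation can make the effect of the sampling method concrete. The sketch below builds a synthetic normal population and compares a simple random sample with a hypothetical non-random scheme in which units below a cutoff can never be reached. The population, cutoff, and function names are illustrative assumptions, not part of any real study.

```python
import random
from statistics import fmean

def simple_random_sample_mean(population, n, rng):
    # Every unit in the population has the same chance of selection
    return fmean(rng.sample(population, n))

def truncated_sample_mean(population, n, rng, cutoff):
    # Hypothetical non-random scheme: units below `cutoff` are unreachable,
    # so only part of the population is eligible for selection
    reachable = [x for x in population if x >= cutoff]
    return fmean(rng.sample(reachable, n))

rng = random.Random(42)
population = [rng.gauss(50, 10) for _ in range(100_000)]  # synthetic population
mu = fmean(population)

srs = simple_random_sample_mean(population, 500, rng)
biased = truncated_sample_mean(population, 500, rng, cutoff=40)
print(f"population mean   {mu:.2f}")
print(f"random sample     {srs:.2f}")
print(f"truncated sample  {biased:.2f}")
```

Increasing the sample size shrinks the random sample's error, but the truncated sample stays centred on the mean of the reachable units rather than on μ.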

Methods of Point Estimation for the Population Mean

Several methods exist for estimating the population mean, each with its strengths and weaknesses. The choice of method often depends on the characteristics of the data and the level of prior knowledge available.

1. The Sample Mean as an Estimator

The most straightforward and commonly used method is to use the sample mean (x̄) as a point estimate for the population mean (μ). This is an intuitive approach: the average of the observed values provides a reasonable guess for the overall average. The sample mean is both an unbiased and consistent estimator, making it a reliable choice in many situations.

-

Unbiased: The expected value of the sample mean equals the population mean (E[x̄] = μ). Averaged over many repeated samples, the sample mean neither overestimates nor underestimates μ, although any single sample mean will generally differ from μ somewhat.

-

Consistent: As the sample size increases, the sample mean converges in probability to the population mean (the law of large numbers, which requires μ to be finite). Larger samples therefore tend to yield more accurate estimates.
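Both properties can be checked by simulation. The sketch below assumes an exponential population with mean 2 (chosen only because it is skewed, not because the method requires it). It averages many sample means of size 5 to illustrate unbiasedness, then shows the typical error |x̄ − μ| shrinking as n grows to illustrate consistency.

```python
import random
from statistics import fmean

def sample_means(true_mean, n, reps, rng):
    # Draw `reps` independent samples of size n from an exponential population
    # with mean `true_mean` and return the mean of each sample
    return [fmean([rng.expovariate(1 / true_mean) for _ in range(n)])
            for _ in range(reps)]

rng = random.Random(0)
TRUE_MEAN = 2.0

# Unbiasedness: even for n = 5, the average of many sample means is close to μ
avg_of_means = fmean(sample_means(TRUE_MEAN, n=5, reps=20_000, rng=rng))

# Consistency: the average absolute error |x̄ - μ| shrinks as n grows
def mean_abs_error(n, reps=200):
    return fmean([abs(m - TRUE_MEAN) for m in sample_means(TRUE_MEAN, n, reps, rng)])

errors = {n: mean_abs_error(n) for n in (10, 100, 1000)}
print(f"average of sample means (n=5): {avg_of_means:.3f}")
print({n: round(e, 3) for n, e in errors.items()})
```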

2. Maximum Likelihood Estimation (MLE)

Maximum Likelihood Estimation is a more general method that selects the parameter value that maximizes the likelihood function. The likelihood function is the probability (or, for continuous data, the probability density) of the observed sample, viewed as a function of the unknown parameter. When the data are normally distributed, the MLE of the population mean is the sample mean (x̄); the same holds for several other common families, such as the Poisson and Bernoulli distributions. This is not true for every distribution, however, and MLE becomes more powerful when dealing with more complex distributions and parameters.
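To see the normal case numerically, the sketch below evaluates the normal log-likelihood of μ over a fine grid and picks the maximizer. It assumes i.i.d. normal data with a known standard deviation (the maximizer does not depend on its value), and the data values are made up. A grid search is used only for transparency; real code would use the closed form or an optimizer.

```python
import math
from statistics import fmean

def normal_log_likelihood(mu, data, sigma):
    # Log-likelihood of the mean `mu` for i.i.d. normal data with known SD `sigma`
    n = len(data)
    ss = sum((x - mu) ** 2 for x in data)
    return -0.5 * n * math.log(2 * math.pi * sigma ** 2) - ss / (2 * sigma ** 2)

def grid_mle(data, sigma, step=0.001):
    # Crude maximization: evaluate the log-likelihood on a grid spanning the data
    lo, hi = min(data), max(data)
    candidates = [lo + k * step for k in range(int((hi - lo) / step) + 1)]
    return max(candidates, key=lambda m: normal_log_likelihood(m, data, sigma))

data = [4.2, 5.1, 3.8, 6.0, 5.4]          # hypothetical observations
mu_hat = grid_mle(data, sigma=1.0)
print(mu_hat, fmean(data))                 # agree up to the grid resolution
```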

3. Method of Moments (MoM)

The Method of Moments equates sample moments (such as the sample mean and the sample variance) to the corresponding population moments and solves the resulting equations for the unknown parameters. Because the first population moment is the mean itself, the MoM estimate of the population mean is always the sample mean (x̄). The method is particularly useful for distributions whose parameters are hard to estimate by other methods, for example when the likelihood equations have no closed-form solution.
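As an illustration, the sketch below applies the method of moments to a gamma distribution, a family chosen here as an example because its MLE has no closed form. The first sample moment gives the mean estimate directly; matching the mean kθ and variance kθ² then yields the shape k and scale θ. The simulated data and true parameter values are assumptions for the demo.

```python
import random
from statistics import fmean

def method_of_moments_gamma(data):
    # First and second raw sample moments
    m1 = fmean(data)
    m2 = fmean([x * x for x in data])
    var = m2 - m1 ** 2                 # second central moment
    # Gamma(shape k, scale θ) has mean kθ and variance kθ²; solve for k and θ
    shape = m1 ** 2 / var
    scale = var / m1
    return m1, shape, scale

rng = random.Random(1)
data = [rng.gammavariate(3.0, 2.0) for _ in range(50_000)]   # true k = 3, θ = 2
mean_hat, shape_hat, scale_hat = method_of_moments_gamma(data)
print(f"mean {mean_hat:.3f}  shape {shape_hat:.3f}  scale {scale_hat:.3f}")
```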

Properties of Good Estimators

A good point estimator should possess certain desirable properties:

1. Unbiasedness

An unbiased estimator has an expected value equal to the true parameter value. As mentioned earlier, the sample mean is an unbiased estimator of the population mean. Unbiasedness ensures that the estimator doesn't systematically overestimate or underestimate the parameter.
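A familiar contrast shows what bias looks like in practice. The sketch below simulates many samples of size 5 from a normal population with variance 4 (an assumed setup) and compares two variance estimators: dividing the sum of squared deviations by n, whose expected value is (n − 1)/n · σ² = 3.2 here, and dividing by n − 1, which is unbiased.

```python
import random
from statistics import fmean

rng = random.Random(7)
SIGMA = 2.0            # true population SD, so the true variance is 4.0
n, reps = 5, 20_000

divide_by_n, divide_by_n_minus_1 = [], []
for _ in range(reps):
    sample = [rng.gauss(0.0, SIGMA) for _ in range(n)]
    xbar = fmean(sample)
    ss = sum((x - xbar) ** 2 for x in sample)   # sum of squared deviations
    divide_by_n.append(ss / n)
    divide_by_n_minus_1.append(ss / (n - 1))

avg_biased = fmean(divide_by_n)              # expected value (n - 1)/n * 4.0 = 3.2
avg_unbiased = fmean(divide_by_n_minus_1)    # expected value 4.0
print(f"divide by n:     {avg_biased:.3f}")
print(f"divide by n - 1: {avg_unbiased:.3f}")
```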

2. Efficiency

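Efficiency refers to the estimator's precision. A more efficient estimator has a smaller variance, meaning its estimates cluster more tightly around the true parameter value. For normally distributed data, the sample mean has the smallest variance of any unbiased estimator of μ. Precision can also be improved through the sampling design: stratified sampling reduces the variance of the estimated mean when the population can be divided into strata that are internally homogeneous but differ from one another. Cluster sampling, by contrast, usually increases variance relative to a simple random sample of the same size and is chosen mainly for cost or practicality.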
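Efficiency can be compared empirically by looking at the spread of two estimators over repeated samples. For normal data, both the sample mean and the sample median are centred on μ, but the median varies more. The sketch below assumes standard normal samples of size 25; the variance ratio it prints is the median's relative efficiency, which for large n under normality approaches 2/π ≈ 0.64.

```python
import random
from statistics import fmean, median, pvariance

rng = random.Random(3)
n, reps = 25, 5_000

means, medians = [], []
for _ in range(reps):
    sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
    means.append(fmean(sample))
    medians.append(median(sample))

var_mean = pvariance(means)       # spread of the sample mean across samples
var_median = pvariance(medians)   # spread of the sample median across samples
print(f"var(mean) {var_mean:.4f}  var(median) {var_median:.4f}  "
      f"ratio {var_mean / var_median:.2f}")
```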

3. Consistency

A consistent estimator converges in probability to the true parameter value as the sample size increases. The sample mean is consistent, meaning that with larger samples, the estimate becomes increasingly accurate.

4. Sufficiency

A sufficient statistic captures all the information about the parameter contained in the sample. The sample mean is a sufficient statistic for the population mean when the data are normally distributed with known variance. In that case, once x̄ is known, the rest of the sample carries no further information about μ. (If the variance is also unknown, the sample mean and sample variance are jointly sufficient.)
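For the normal case with known variance σ², sufficiency follows from the Fisher–Neyman factorization theorem. Using the identity Σ(x_i − μ)² = Σ(x_i − x̄)² + n(x̄ − μ)², the likelihood splits into a factor that does not involve μ and a factor that depends on the data only through x̄:

```latex
L(\mu) = \prod_{i=1}^{n} \frac{1}{\sqrt{2\pi\sigma^2}}
         \exp\!\left(-\frac{(x_i-\mu)^2}{2\sigma^2}\right)
       = \underbrace{(2\pi\sigma^2)^{-n/2}
         \exp\!\left(-\frac{\sum_{i=1}^{n}(x_i-\bar{x})^2}{2\sigma^2}\right)}_{\text{does not involve } \mu}
         \;\cdot\;
         \underbrace{\exp\!\left(-\frac{n(\bar{x}-\mu)^2}{2\sigma^2}\right)}_{\text{depends on the data only through } \bar{x}}
```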

Confidence Intervals: Beyond Point Estimation

While point estimation provides a single value as an estimate, it doesn't quantify the uncertainty associated with that estimate. This is where confidence intervals come into play. A confidence interval is a range of plausible values for the population mean, constructed so that a specified proportion of such intervals (e.g., 95%) would contain the true mean if the sampling were repeated many times. The width of the interval reflects the uncertainty in the estimate; wider intervals indicate greater uncertainty. A confidence interval for the population mean is built from the sample mean, its standard error, and a critical value: from the z-distribution when the population standard deviation is known, and from the t-distribution when it must be estimated from the sample. For large samples, the two critical values are nearly identical.
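The repeated-sampling meaning of "95% confidence" can be checked directly. The sketch below assumes normal data with a known population standard deviation, so it uses the z-interval x̄ ± z·σ/√n. It then counts how often intervals from many simulated samples contain the true mean. The parameter values are arbitrary choices for the demo.

```python
import random
from statistics import NormalDist, fmean

def z_interval(data, sigma, level=0.95):
    # Confidence interval for the mean, assuming the population SD `sigma` is known
    z = NormalDist().inv_cdf(0.5 + level / 2)   # about 1.96 for a 95% interval
    se = sigma / len(data) ** 0.5               # standard error of x̄
    xbar = fmean(data)
    return xbar - z * se, xbar + z * se

def coverage(mu, sigma, n, reps, seed=0):
    # Fraction of repeated samples whose interval contains the true mean
    rng = random.Random(seed)
    hits = 0
    for _ in range(reps):
        data = [rng.gauss(mu, sigma) for _ in range(n)]
        lo, hi = z_interval(data, sigma)
        hits += lo <= mu <= hi
    return hits / reps

print(coverage(mu=10.0, sigma=2.0, n=20, reps=2_000))  # typically close to 0.95
```

Any single computed interval either contains μ or does not; the 95% describes the long-run behaviour of the procedure.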

Dealing with Different Data Distributions

The methods discussed so far often assume normally distributed data, a reasonably large sample, or a known population variance. Real-world data frequently violate these assumptions. Let's examine some common scenarios:

Non-Normal Data

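When data deviate significantly from normality, the sample mean might not be the most efficient estimator, and it can be strongly affected by outliers. Robust estimators, which are less sensitive to outliers and non-normality, become more appropriate. Examples include the median and the trimmed mean. The median is particularly useful for skewed distributions because extreme values barely influence it. Keep in mind that for a skewed distribution the median and the trimmed mean estimate a different measure of center than μ, so they are appropriate when a robust summary of a typical value is what the analysis needs.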
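The sketch below compares the three estimators on a small, made-up data set containing one extreme value. The trimmed mean here drops the same proportion of observations from each end before averaging; this is one common definition, written by hand rather than taken from a library.

```python
from statistics import fmean, median

def trimmed_mean(data, proportion=0.1):
    # Drop the lowest and highest `proportion` of observations, then average the rest
    if not 0 <= proportion < 0.5:
        raise ValueError("proportion must be in [0, 0.5)")
    xs = sorted(data)
    k = int(len(xs) * proportion)
    return fmean(xs[k:len(xs) - k])

data = [12, 14, 15, 15, 16, 17, 18, 250]   # hypothetical data with one outlier
print(fmean(data))                # 44.625, pulled upward by the single outlier
print(median(data))               # 15.5
print(trimmed_mean(data, 0.125))  # mean of the middle six values, about 15.83
```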

Small Sample Sizes

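When the population standard deviation must be estimated from a small sample, the t-distribution with n − 1 degrees of freedom is preferred over the z-distribution when constructing confidence intervals. Its heavier tails account for the additional uncertainty in the estimated standard deviation, and it approaches the standard normal distribution as the sample size grows.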
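The effect of the heavier tails shows up in the critical values. To stay within the standard library, the sketch below computes t quantiles numerically by integrating the Student's t density with Simpson's rule and inverting the CDF by bisection. This is a rough illustration, not a production routine; a library function such as SciPy's `scipy.stats.t.ppf` would normally be used instead. It prints 95% two-sided critical values for several sample sizes next to the z value.

```python
import math
from statistics import NormalDist

def t_pdf(x, df):
    # Student's t density; lgamma avoids overflow for large df
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c) * (1 + x * x / df) ** (-(df + 1) / 2)

def t_cdf(x, df, steps=2000):
    # P(T <= x): 0.5 plus a Simpson's-rule integral of the density over [0, x]
    if x < 0:
        return 1 - t_cdf(-x, df, steps)
    h = x / steps
    total = t_pdf(0, df) + t_pdf(x, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return 0.5 + total * h / 3

def t_quantile(p, df, hi=50.0, tol=1e-7):
    # Critical value t with P(T <= t) = p, for 0.5 <= p < 1, found by bisection;
    # assumes the answer lies below `hi`
    if not 0.5 <= p < 1:
        raise ValueError("p must be in [0.5, 1)")
    lo = 0.0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if t_cdf(mid, df) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

z = NormalDist().inv_cdf(0.975)
for n in (5, 10, 30, 100):
    # 95% two-sided critical values: t with n - 1 degrees of freedom versus z
    print(n, round(t_quantile(0.975, n - 1), 3), round(z, 3))
```

For n = 5 the t critical value is roughly 40% larger than z, so the interval is correspondingly wider; by n = 100 the difference is small.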

Population Variance Unknown

When the population variance (σ²) is unknown, it's estimated using the sample variance (s²). This introduces additional uncertainty, reflected in the use of the t-distribution and the inclusion of the sample standard deviation in the confidence interval calculation.
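Assuming normally distributed observations, the pieces fit together as follows: the sample variance uses the n − 1 divisor, the standardized sample mean with s in place of σ follows a t-distribution with n − 1 degrees of freedom, and the resulting 100(1 − α)% interval for μ is:

```latex
s^2 = \frac{1}{n-1}\sum_{i=1}^{n}(x_i-\bar{x})^2,
\qquad
T = \frac{\bar{x}-\mu}{s/\sqrt{n}} \sim t_{n-1},
\qquad
\bar{x} \pm t_{1-\alpha/2,\,n-1}\,\frac{s}{\sqrt{n}}
```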

Advanced Topics

The world of point estimation extends far beyond the basics. Several advanced topics warrant further exploration:

Bayesian Estimation

Bayesian estimation incorporates prior knowledge about the parameter into the estimation process. It uses Bayes' theorem to update the prior belief based on the observed data, resulting in a posterior distribution that represents the updated belief about the parameter. This approach is particularly useful when prior information is available or when dealing with small sample sizes.
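A standard textbook case is the normal model with known data variance and a normal prior on μ, for which the posterior is also normal. This conjugate setup is an assumption chosen for simplicity. In that setting the posterior mean is a precision-weighted average of the prior mean and the sample mean. The sketch below shows the prior pulling the estimate toward its own mean for a small sample, and the data dominating for a large one.

```python
def normal_posterior(prior_mean, prior_var, data_mean, n, sigma2):
    """Posterior mean and variance of μ under a Normal(prior_mean, prior_var)
    prior and n i.i.d. Normal(μ, sigma2) observations with known sigma2."""
    prior_precision = 1 / prior_var
    data_precision = n / sigma2          # precision contributed by the sample mean
    post_var = 1 / (prior_precision + data_precision)
    post_mean = post_var * (prior_precision * prior_mean + data_precision * data_mean)
    return post_mean, post_var

# Small sample: the prior centred at 0 pulls the estimate away from x̄ = 2
small = normal_posterior(prior_mean=0.0, prior_var=1.0, data_mean=2.0, n=4, sigma2=1.0)
# Large sample: the posterior mean is close to the sample mean
large = normal_posterior(prior_mean=0.0, prior_var=1.0, data_mean=2.0, n=400, sigma2=1.0)
print(small)
print(large)
```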

Bootstrapping

Bootstrapping is a resampling technique used to estimate the sampling distribution of a statistic, such as the sample mean. It involves repeatedly drawing samples with replacement from the original sample, calculating the statistic for each resample, and then using the distribution of the resampled statistics to estimate properties like the variance of the estimator. Bootstrapping is particularly helpful when the underlying distribution is unknown or when theoretical methods are difficult to apply.
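The procedure above can be sketched in a few lines for the sample mean. The data values, the number of resamples, and the simple percentile interval are illustrative choices; other bootstrap interval methods exist. For the mean, the bootstrap standard error should land close to the plug-in value √(σ̂²/n), where σ̂² is the variance computed with divisor n.

```python
import random
from statistics import fmean, pstdev

def bootstrap_means(data, n_resamples=5_000, seed=0):
    # Resample the data with replacement and record each resample's mean
    rng = random.Random(seed)
    n = len(data)
    return [fmean(rng.choices(data, k=n)) for _ in range(n_resamples)]

data = [12.1, 9.8, 11.4, 10.6, 13.2, 8.9, 10.1, 12.7, 11.0, 9.5, 10.8, 11.9]
boot = sorted(bootstrap_means(data))

se_boot = pstdev(boot)               # bootstrap standard error of x̄
# Simple percentile interval: the 2.5th and 97.5th percentiles of the resampled means
ci_95 = (boot[int(0.025 * len(boot))], boot[int(0.975 * len(boot)) - 1])
print(f"x̄ = {fmean(data):.3f}, bootstrap SE = {se_boot:.3f}, 95% CI = {ci_95}")
```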

Generalized Estimating Equations (GEEs)

GEEs are used to analyze correlated data, such as longitudinal data or data from clustered samples. They provide a robust method for estimating population means in the presence of correlation, which violates the assumption of independent observations underlying many standard estimation methods.
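In the simplest case, an intercept-only GEE with an independence working correlation, the estimate of the mean is just the pooled sample mean. What changes is the standard error: the sandwich (cluster-robust) variance sums residuals within each cluster before squaring, so within-cluster correlation is no longer ignored. The sketch below is a hand-written illustration of that special case on simulated clustered data, without small-sample corrections. Full GEE implementations with other working correlation structures are available in libraries such as statsmodels.

```python
import random
from statistics import fmean, stdev

def intercept_only_gee(clusters):
    """Intercept-only GEE with an independence working correlation.

    The estimating equation sum_g sum_i (y_gi - mu) = 0 gives the pooled mean;
    the sandwich variance is sum_g (sum_i r_gi)^2 / N^2, where r_gi are the
    residuals and N is the total number of observations."""
    all_y = [y for cluster in clusters for y in cluster]
    N = len(all_y)
    mu_hat = fmean(all_y)
    meat = sum(sum(y - mu_hat for y in cluster) ** 2 for cluster in clusters)
    robust_se = meat ** 0.5 / N
    naive_se = stdev(all_y) / N ** 0.5   # treats all N observations as independent
    return mu_hat, robust_se, naive_se

# Simulated data: 30 clusters of 10 observations that share a cluster effect
rng = random.Random(5)
clusters = []
for _ in range(30):
    effect = rng.gauss(0, 2)
    clusters.append([10 + effect + rng.gauss(0, 1) for _ in range(10)])

mu_hat, robust_se, naive_se = intercept_only_gee(clusters)
print(f"mean {mu_hat:.3f}  robust SE {robust_se:.3f}  naive SE {naive_se:.3f}")
```

Because observations within a cluster move together, the naive standard error understates the uncertainty; the robust one accounts for it.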

Conclusion

Point estimation of the population mean is a fundamental concept in statistics. While the sample mean often serves as a simple and effective estimator, understanding its properties and limitations is crucial. The choice of estimation method should be tailored to the specific characteristics of the data and the research question. Furthermore, the integration of confidence intervals and consideration of advanced techniques like Bayesian estimation and bootstrapping greatly enhances the robustness and interpretability of the analysis. By mastering the principles of point estimation, researchers can extract meaningful insights from data, paving the way for informed decision-making and accurate generalizations about the population under study.
