What Is The Symbol For Entropy

listenit

Apr 03, 2025 · 6 min read

Table of Contents

- What is the Symbol for Entropy? A Deep Dive into Thermodynamics and Information Theory

- The Ubiquitous Symbol: S

- Entropy in Thermodynamics: A Classical Perspective

- Calculating Entropy Change in Thermodynamics

- Microscopic Interpretation of Entropy

- Entropy in Information Theory: A Different Perspective, Same Symbol

- Shannon Entropy: Quantifying Information Uncertainty

- The Connection Between Thermodynamic and Information Entropy

- Why 'S' for Entropy? A Speculative History

- Beyond the Symbol: Understanding the Essence of Entropy

- Entropy in Everyday Life

- Conclusion: The Significance of S


What is the Symbol for Entropy? A Deep Dive into Thermodynamics and Information Theory

Entropy, a cornerstone concept in both thermodynamics and information theory, represents the degree of disorder or randomness within a system. While the underlying principles differ slightly between these fields, the core idea remains consistent: a higher entropy value indicates greater disorder. But what symbol universally represents this crucial concept? The answer, while seemingly simple, opens the door to a fascinating exploration of entropy's multifaceted nature.

The Ubiquitous Symbol: S

The most common and widely accepted symbol for entropy is S. This convention is used across diverse scientific disciplines, from physics and chemistry to computer science and engineering. Although no definitive account of why "S" was chosen has survived, the letter's association with entropy is firmly entrenched in the scientific literature. The convention dates back to the scientists who laid the foundations of thermodynamics and statistical mechanics.

Entropy in Thermodynamics: A Classical Perspective

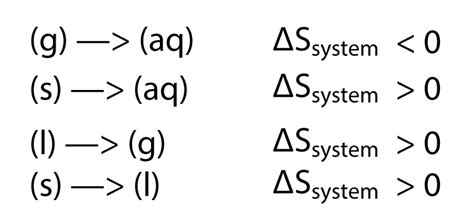

In classical thermodynamics, entropy is a state function, meaning its value depends only on the current state of the system, not on the path taken to reach that state. The second law of thermodynamics dictates that the total entropy of an isolated system can only increase over time, or remain constant in ideal cases of reversible processes. This fundamental principle governs the directionality of natural processes, explaining why heat spontaneously flows from hot to cold objects and why irreversible processes are always accompanied by an increase in entropy.
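The direction of heat flow can be checked with a small calculation. The sketch below is illustrative only: it assumes two reservoirs large enough that their temperatures stay fixed, so each one's entropy changes by Q/T when it exchanges heat Q. The function name and the sample values are hypothetical.

```python
def total_entropy_change(q_joules, t_hot_kelvin, t_cold_kelvin):
    """Total entropy change when heat q_joules leaves the hot reservoir
    and enters the cold one. A negative q_joules models the reverse flow."""
    ds_hot = -q_joules / t_hot_kelvin    # hot reservoir gives up heat
    ds_cold = q_joules / t_cold_kelvin   # cold reservoir receives it
    return ds_hot + ds_cold


# Hypothetical values: 1000 J moving between reservoirs at 400 K and 300 K.
forward = total_entropy_change(1000.0, 400.0, 300.0)   # hot -> cold
reverse = total_entropy_change(-1000.0, 400.0, 300.0)  # cold -> hot

print(f"hot -> cold: {forward:+.4f} J/K")
print(f"cold -> hot: {reverse:+.4f} J/K")
```

The hot-to-cold case gives a positive total, while the reverse flow would lower the total entropy, which is why it does not happen spontaneously.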

Calculating Entropy Change in Thermodynamics

The change in entropy (ΔS) of a system undergoing a reversible process can be calculated using the following equation:

ΔS = ∫(δQ/T)

where:

- ΔS represents the change in entropy

- δQ represents an infinitesimal amount of heat transferred reversibly to the system

- T represents the absolute temperature at which the heat transfer occurs

This equation highlights the relationship between entropy, heat, and temperature. For a given amount of heat, transfer at a lower temperature produces a larger increase in entropy, because the same δQ is divided by a smaller T.
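As a worked illustration of the integral, consider heating a substance whose specific heat c is constant, so that δQ = m c dT and the integral evaluates to ΔS = m c ln(T2/T1). The sketch below compares that closed form with a direct numerical sum of δQ/T. The constant-specific-heat assumption, the function names, and the approximate value used for water are not from the original text.

```python
import math


def entropy_change_heating(mass_kg, specific_heat, t_start_kelvin, t_end_kelvin):
    """Closed form m * c * ln(T2 / T1), valid for constant specific heat."""
    if t_start_kelvin <= 0 or t_end_kelvin <= 0:
        raise ValueError("temperatures must be positive absolute temperatures")
    return mass_kg * specific_heat * math.log(t_end_kelvin / t_start_kelvin)


def entropy_change_numeric(mass_kg, specific_heat, t_start_kelvin, t_end_kelvin,
                           steps=100_000):
    """Midpoint Riemann sum of dQ / T with dQ = m * c * dT."""
    dt = (t_end_kelvin - t_start_kelvin) / steps
    total = 0.0
    for i in range(steps):
        t_mid = t_start_kelvin + (i + 0.5) * dt
        total += mass_kg * specific_heat * dt / t_mid
    return total


# Assumed example: 1 kg of water, c taken as roughly 4186 J/(kg K),
# heated from 293.15 K to 353.15 K.
closed = entropy_change_heating(1.0, 4186.0, 293.15, 353.15)
summed = entropy_change_numeric(1.0, 4186.0, 293.15, 353.15)
print(f"closed form: {closed:.3f} J/K, numeric sum: {summed:.3f} J/K")
```

The two results should agree closely, and swapping the start and end temperatures (cooling) gives a negative ΔS for the substance.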

Microscopic Interpretation of Entropy

While the macroscopic definition of entropy provides a practical way to calculate its changes, a more profound understanding comes from the microscopic perspective offered by statistical mechanics. Here, entropy is related to the number of possible microstates (microscopic configurations) consistent with the system's macroscopic state. A system with many possible microstates has higher entropy than a system with fewer microstates.

This probabilistic interpretation of entropy connects the seemingly abstract concept to the inherent randomness of microscopic particle arrangements. Boltzmann's entropy formula formalizes this connection:

S = k<sub>B</sub> ln W

where:

- S is the entropy

- k<sub>B</sub> is the Boltzmann constant (a fundamental physical constant, equal to 1.380649 × 10<sup>-23</sup> J/K)

- W is the number of microstates corresponding to the system's macrostate

This equation elegantly connects the macroscopic thermodynamic property of entropy (S) to the microscopic number of accessible microstates (W). A larger W signifies higher disorder and, consequently, higher entropy.
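A toy model makes the counting concrete. The sketch below assumes a hypothetical system of N two-state particles (think of coins), where the macrostate is the number of "heads" and W is the binomial coefficient counting the arrangements that produce it. The model and the function names are illustrative assumptions, not part of the original discussion.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K


def boltzmann_entropy(num_microstates):
    """S = k_B * ln(W) for a macrostate with W equally likely microstates."""
    if num_microstates < 1:
        raise ValueError("a macrostate needs at least one microstate")
    return K_B * math.log(num_microstates)


def two_state_microstates(n_particles, n_up):
    """Number of ways to choose which n_up of n_particles are 'up'."""
    return math.comb(n_particles, n_up)


n = 100
for n_up in (0, 10, 50):
    w = two_state_microstates(n, n_up)
    print(f"{n_up:>3} up: W = {w}, S = {boltzmann_entropy(w):.3e} J/K")
```

The all-down macrostate has a single microstate and therefore zero entropy, while the evenly mixed macrostate has the most microstates and the highest entropy.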

Entropy in Information Theory: A Different Perspective, Same Symbol

While originating in thermodynamics, the concept of entropy found a surprisingly apt application in information theory, pioneered by Claude Shannon. Here, entropy quantifies the uncertainty or randomness associated with information. It measures the average amount of information needed to describe the outcome of a random variable.

Shannon Entropy: Quantifying Information Uncertainty

In information theory, entropy (usually symbolized by H, though S also appears) is defined as:

H(X) = - Σ P(x<sub>i</sub>) log<sub>2</sub> P(x<sub>i</sub>)

where:

- H(X) represents the Shannon entropy of the random variable X

- P(x<sub>i</sub>) represents the probability of the i-th outcome of X

- Σ denotes summation over all possible outcomes

This equation quantifies the average amount of information (in bits) needed to specify the outcome of a random variable. A higher entropy value indicates greater uncertainty or randomness in the information source. For instance, a fair coin toss (equal probabilities for heads and tails) has higher Shannon entropy than a biased coin (significantly higher probability for one outcome).
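The coin comparison can be reproduced in a few lines. This is a minimal sketch of the formula above; the function name and the 90/10 bias are chosen only for illustration, and outcomes with zero probability are skipped under the usual convention that they contribute nothing to the sum.

```python
import math


def shannon_entropy(probabilities):
    """Shannon entropy in bits of a discrete probability distribution."""
    if any(p < 0 for p in probabilities):
        raise ValueError("probabilities must be non-negative")
    if not math.isclose(sum(probabilities), 1.0, abs_tol=1e-9):
        raise ValueError("probabilities must sum to 1")
    # Zero-probability outcomes are skipped (0 * log 0 is taken as 0).
    return sum(-p * math.log2(p) for p in probabilities if p > 0)


fair_coin = shannon_entropy([0.5, 0.5])     # 1.0 bit
biased_coin = shannon_entropy([0.9, 0.1])   # about 0.469 bits
certain = shannon_entropy([1.0, 0.0])       # 0.0 bits, no uncertainty

print(f"fair: {fair_coin:.3f}, biased: {biased_coin:.3f}, certain: {certain:.3f}")
```

The fair coin reaches the maximum of one bit for two outcomes, and the entropy falls toward zero as one outcome becomes certain.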

The Connection Between Thermodynamic and Information Entropy

Although arising from different contexts, thermodynamic and information entropy share a profound conceptual similarity. Both quantify disorder or randomness, albeit in different realms. The connection lies in the underlying probabilistic nature of both systems. The thermodynamic entropy reflects the randomness of microscopic particle arrangements, while information entropy reflects the randomness of information signals. This parallel underscores the universality of the entropy concept as a measure of uncertainty and disorder.
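The parallel can also be stated numerically. The sketch below brings in the Gibbs entropy from statistical mechanics, S = -k<sub>B</sub> Σ p ln p, which is not mentioned in the text above and is used here as an assumption of the example. For W equally likely microstates it reduces to k<sub>B</sub> ln W, and for any distribution it equals the Shannon entropy in bits multiplied by k<sub>B</sub> ln 2. The function names and the sample distributions are hypothetical.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K


def gibbs_entropy(probabilities):
    """Gibbs entropy in J/K: -k_B * sum(p * ln p) over microstate probabilities."""
    return -K_B * sum(p * math.log(p) for p in probabilities if p > 0)


def shannon_entropy_bits(probabilities):
    """Shannon entropy in bits: -sum(p * log2 p)."""
    return sum(-p * math.log2(p) for p in probabilities if p > 0)


# Uniform case: W equally likely microstates.
w = 8
uniform = [1.0 / w] * w
s_gibbs = gibbs_entropy(uniform)
s_boltzmann = K_B * math.log(w)

# Non-uniform case: the two measures still differ only by the factor k_B * ln 2.
skewed = [0.5, 0.25, 0.125, 0.125]
s_skewed = gibbs_entropy(skewed)
s_from_bits = K_B * math.log(2) * shannon_entropy_bits(skewed)

print(f"uniform: Gibbs {s_gibbs:.6e} J/K vs Boltzmann {s_boltzmann:.6e} J/K")
print(f"skewed:  Gibbs {s_skewed:.6e} J/K vs k_B ln2 * H {s_from_bits:.6e} J/K")
```

In this view the two entropies are the same function of a probability distribution, differing only in the choice of logarithm base and the constant k<sub>B</sub> that sets the physical units.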

Why 'S' for Entropy? A Speculative History

While a definitive historical account explaining the choice of "S" for entropy is elusive, several factors likely contributed:

- Rudolf Clausius: A pioneering figure in thermodynamics, Clausius introduced the concept of entropy and gave the second law of thermodynamics one of its classic formulations. He also introduced the symbol "S" himself in his 1865 work, but did not explain why he picked that letter. The German word is "Entropie," so the letter is not an abbreviation of the name. One popular but unconfirmed suggestion is that it honors Sadi Carnot.

- Simplicity and Universality: The letter "S" is simple, concise, and easily recognizable across different languages and scientific communities. Its adoption as the standard symbol ensured consistency and facilitated clear communication within the scientific world.

- Absence of Preexisting Symbol Conflicts: At the time the symbol was established, "S" likely did not have a strongly competing meaning within the field of thermodynamics, minimizing potential confusion and ambiguity.

Beyond the Symbol: Understanding the Essence of Entropy

While the symbol "S" serves as a convenient shorthand for entropy, it's crucial to understand the underlying concept. Entropy isn't merely a mathematical formula; it's a fundamental property of systems, reflecting the inevitable trend towards disorder and randomness in the universe. Its impact extends far beyond physics and chemistry, influencing diverse fields like biology, economics, and computer science.

Entropy in Everyday Life

The concept of entropy is far from confined to the lab or theoretical physics. We encounter its manifestations daily:

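- Room cleaning: The act of tidying up a room is a direct struggle against entropy. We're actively reducing the disorder by arranging objects into an organized state. This does not violate the second law, because the energy we expend raises the entropy of the surroundings by more than the room's entropy falls.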

- Aging: The aging process is, to some extent, a consequence of entropy. The body's complex systems degrade over time, demonstrating a gradual increase in disorder.

- Technological innovation: The constant development of new technologies partly aims to mitigate the effects of entropy by creating more efficient and ordered systems.

Conclusion: The Significance of S

In conclusion, while the origin of the symbol "S" for entropy remains partly shrouded in history, its widespread adoption reflects its importance in various scientific fields. Understanding entropy, symbolized by "S," is crucial to grasp the fundamental laws governing the universe and the intricate interplay between order and disorder in diverse systems. From the microscopic dance of particles to the macroscopic evolution of the cosmos, entropy's influence is profound and pervasive. Its symbol, a simple "S," represents a concept of remarkable depth and far-reaching implications.
